A Brief Story

You are having dinner at a restaurant. Your waitress arrives to take your order, asking how you are.

“Fine,” you reply. “How are you?”

“Great!” she responds with a smile that is perhaps a bit too wide and stiff. You notice a subtle tension around her eyes. You infer that she is not, in reality, great but is masking her distress. Perhaps her boss has just yelled at her or her car broke down on the way to work.

Humans are incredibly adept at interpreting facial expressions. We can even tell the difference between deliberate expressions, which are intentional or acted (such as smiling despite feeling anxious or disappointed), and spontaneous expressions, which are unconscious, genuine manifestations of felt emotion (Ekman & Rosenberg 1997).

Background

While most humans are innately talented at displaying and interpreting facial expressions, embodied conversational agents (ECAs) rarely, if ever, achieve the same range or nuance found in human expression.

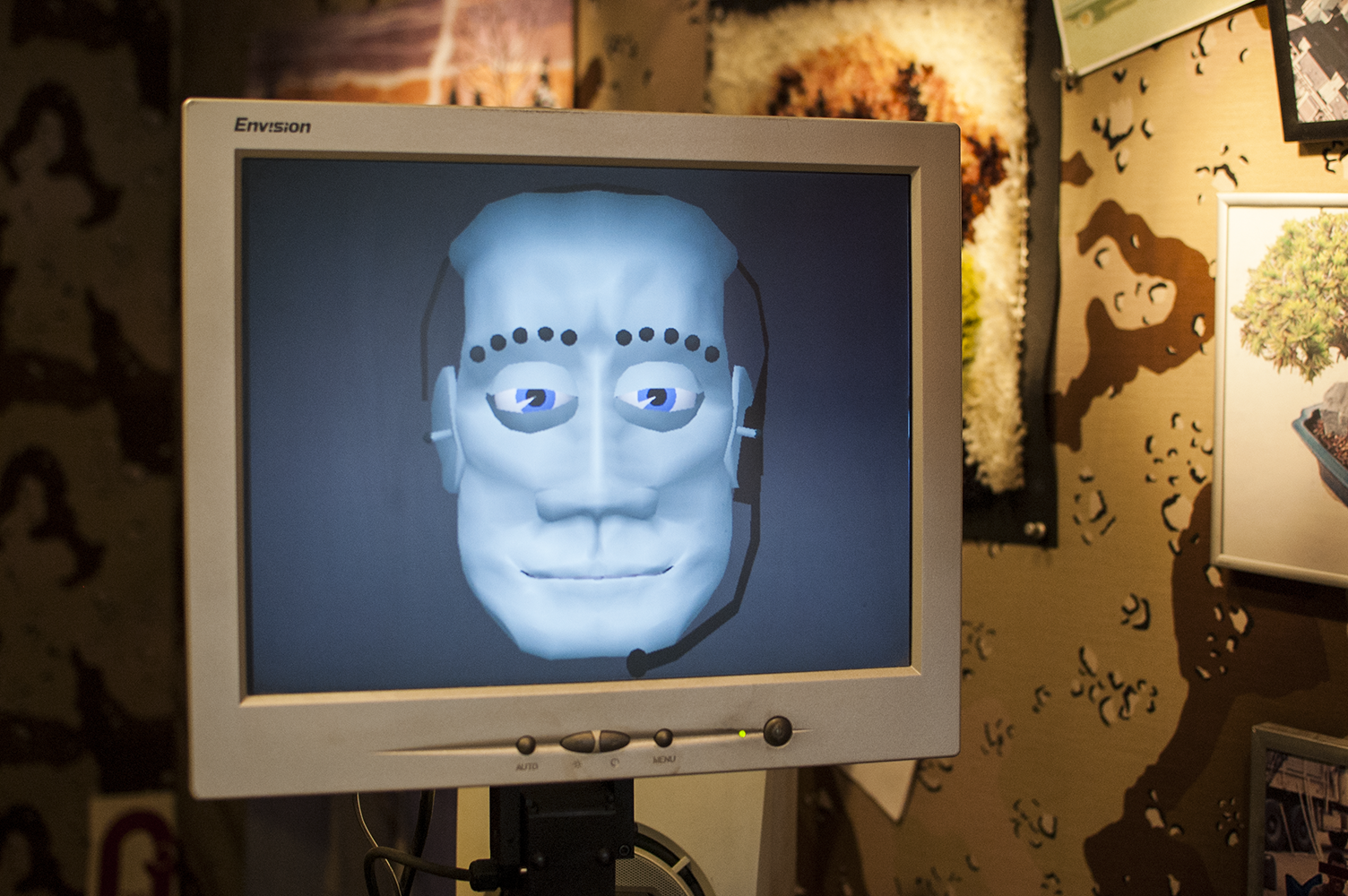

Tank, an ECA, is a product of the “Roboceptionist” project at Carnegie Mellon University. Tank and his predecessors are social robots with rich and emotional backstories. Ideally, people using Tank will explore his story and feel empathy for his character.

Like many ECAs, Tank is equipped with an expressive face programmed to display a wide variety of emotions. The emotion displayed at any given moment is determined by several factors, including long-term pre-programmed "stories", recent conversation history, and the phrase Tank is currently saying. A phrase may be tagged with multiple sequential emotions, in which case Tank's face must switch quickly between several distinct emotions as the phrase is spoken.
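To make the switching problem concrete, here is a minimal Python sketch of a phrase tagged with sequential emotions. Tank's actual tagging format is not described here, so `EmotionTag`, `active_emotion`, `switches_per_second`, and the use of seconds as onset times are all hypothetical. The sketch only shows how a face driven by such tags would change expression partway through a phrase.

```python
from bisect import bisect_right
from dataclasses import dataclass

@dataclass(frozen=True)
class EmotionTag:
    emotion: str    # e.g. "joy"
    start_s: float  # hypothetical onset, in seconds from the start of the phrase

def active_emotion(tags: list, t: float) -> str:
    """Emotion the face should show t seconds into the phrase (tags sorted by start_s)."""
    starts = [tag.start_s for tag in tags]
    i = bisect_right(starts, t)  # number of tags that have already started
    return "neutral" if i == 0 else tags[i - 1].emotion

def switches_per_second(tags: list, duration_s: float) -> float:
    """How often the face must change expression while the phrase is spoken."""
    changes = sum(1 for a, b in zip(tags, tags[1:]) if a.emotion != b.emotion)
    return changes / duration_s

phrase_tags = [EmotionTag("joy", 0.0), EmotionTag("sadness", 1.2), EmotionTag("surprise", 2.0)]
print(active_emotion(phrase_tags, 1.5))  # sadness
print(round(switches_per_second(phrase_tags, 3.0), 2))  # 0.67
```

A higher `switches_per_second` value leaves the face less time to settle into each expression, which is one plausible source of the unnatural-seeming movement that community members reported.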

Our team interviewed community members about their feelings toward Tank. While many people found him helpful, some also admitted that they found him “creepy” or “awkward.” Through our interviews, we discovered that much of this awkwardness came down to two things: (1) unnatural-seeming facial expressions and movements, and (2) a mismatch between the emotions suggested by the spoken text and the emotions viewers perceived.

Given this apparent mismatch between Tank's intended expressions and how many viewers perceived them, our goal was to understand how we might increase empathy for Tank and other ECAs by exploring how people interpret complex, nuanced emotion expressed by an animated face. In particular, we wanted to find a way to calibrate a face so that its emotions are interpreted as intended.

Inspiration

In his book Making Comics, Scott McCloud adapts Paul Ekman’s classification of human emotion (joy, sadness, anger, disgust, fear, and surprise) into a framework for expressing emotion in simple, drawn faces. McCloud describes in detail how anatomical muscle groups within the face create each of Ekman’s six expressions. He also describes how these fundamental emotions can combine to create complex emotions (e.g., joy + sadness = nostalgia) (McCloud 2011).

Randall Munroe, author of the webcomic xkcd, ran a survey asking participants to name colors drawn at random from the RGB color space and displayed on their screens. He collected five million color names from 222,500 user sessions. By identifying the most popular responses, he was able to draw a map showing the “boundaries” of each commonly named color on a two-dimensional plane.
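One simple way to turn many free-text names into labeled regions is a majority vote over a grid: bucket each response by its position on the plane, keep the most common name per cell, and treat cells whose neighbors disagree as boundaries. This is a hedged illustration, not Munroe's published procedure; `majority_label_map`, `boundary_cells`, the `cell_size` parameter, and the demo data are hypothetical.

```python
from collections import Counter, defaultdict

def majority_label_map(responses, cell_size=32):
    """Map each grid cell to the most common name given to points inside it.

    responses: iterable of (x, y, name), e.g. two RGB channels with the third fixed.
    """
    votes = defaultdict(Counter)
    for x, y, name in responses:
        cell = (int(x // cell_size), int(y // cell_size))
        votes[cell][name.strip().lower()] += 1  # normalize case and spacing
    return {cell: counts.most_common(1)[0][0] for cell, counts in votes.items()}

def boundary_cells(label_map):
    """Cells whose right or lower neighbor has a different majority name."""
    edges = set()
    for (col, row), name in label_map.items():
        for neighbor in ((col + 1, row), (col, row + 1)):
            if neighbor in label_map and label_map[neighbor] != name:
                edges.add((col, row))
    return edges

demo = [(10, 10, "Green"), (12, 20, "green"), (5, 5, "teal"), (40, 10, "blue"), (50, 20, "Blue")]
labels = majority_label_map(demo)
print(labels)                  # {(0, 0): 'green', (1, 0): 'blue'}
print(boundary_cells(labels))  # {(0, 0)}
```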

Process

Our group conducted a descriptive design study to understand how people identify complex emotion in an animated face. Specifically, we explored Ekman’s six expressions (joy, sadness, anger, disgust, fear, and surprise) combined in pairs to form 16 unique complex expressions.

We first developed an expressive web-based facial simulator capable of rendering complex expressions. It can generate expressions that combine Ekman’s six fundamental expressions in various proportions and levels of arousal (e.g., 30% fear + 40% sadness + 20% disgust).
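A minimal sketch of how such blending could work, assuming each fundamental expression is stored as a vector of facial control offsets from a neutral face and that a mixture is their weighted sum, scaled by arousal. The parameter names, pose values, and the `blend` function are illustrative placeholders, not the simulator's actual rig. Treating any unassigned weight (10% in the example above) as neutral is likewise an assumption.

```python
# Hypothetical control parameters; values are offsets from a neutral face (all zeros).
PARAMS = ("brow_raise", "lid_open", "mouth_corner", "jaw_drop")

# Placeholder poses for the six fundamental expressions (not calibrated values).
POSES = {
    "joy":      (0.1, -0.2, 1.0, 0.2),
    "sadness":  (0.4, -0.3, -0.8, 0.0),
    "anger":    (-1.0, 0.3, -0.3, 0.1),
    "disgust":  (-0.5, -0.4, -0.5, 0.1),
    "fear":     (0.8, 0.8, -0.2, 0.4),
    "surprise": (1.0, 1.0, 0.0, 0.8),
}

def blend(weights: dict, arousal: float = 1.0) -> dict:
    """Weighted sum of poses, scaled by arousal; unassigned weight stays neutral."""
    if any(w < 0 for w in weights.values()) or sum(weights.values()) > 1.0 + 1e-9:
        raise ValueError("weights must be non-negative and sum to at most 1")
    face = {p: 0.0 for p in PARAMS}
    for emotion, w in weights.items():
        for p, offset in zip(PARAMS, POSES[emotion]):
            face[p] += w * offset
    # Arousal scales the whole displacement away from neutral.
    return {p: arousal * v for p, v in face.items()}

mixed = blend({"fear": 0.3, "sadness": 0.4, "disgust": 0.2})
print(round(mixed["brow_raise"], 2))  # 0.3
```

A linear blend keeps the mapping from proportions to face easy to reason about, which matters when the goal is to calibrate how each proportion is read.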

We then created a web-based survey that displayed one of the complex expressions to the participant and asked them to label the emotion shown. As of this writing, participants have labeled 3,392 expressions across 337 unique user sessions.
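The survey loop can be sketched as sampling a stimulus and storing the participant's label. This Python sketch assumes each stimulus mixes two distinct fundamental expressions whose proportions sum to 100% in 10% steps, and that labels are free text normalized for case and spacing before counting. `Response`, `sample_stimulus`, and `record` are hypothetical names, not the survey's real code.

```python
import random
from dataclasses import dataclass

# Ekman's six fundamental expressions, as listed in the study description.
EMOTIONS = ("joy", "sadness", "anger", "disgust", "fear", "surprise")

@dataclass(frozen=True)
class Response:
    session_id: str
    stimulus: tuple  # ((emotion, proportion), (emotion, proportion))
    label: str       # participant's label after normalization

def sample_stimulus(rng: random.Random) -> tuple:
    """Pick two distinct expressions and split 100% between them (assumed 10% steps)."""
    first, second = rng.sample(EMOTIONS, 2)
    share = rng.randint(1, 9) / 10  # 10% to 90% for the first component
    return ((first, share), (second, round(1 - share, 1)))

def record(session_id: str, stimulus: tuple, raw_label: str) -> Response:
    """Store a label, collapsing case and whitespace so 'Nostalgic ' and 'nostalgic' match."""
    return Response(session_id, stimulus, " ".join(raw_label.lower().split()))

rng = random.Random(42)
stimulus = sample_stimulus(rng)
print(record("session-001", stimulus, "  Nostalgic "))
```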

Data & Visualization

We initially visualized our results as scatter plots. We highlighted the most popular labels and enclosed each one's points in a convex hull to emphasize where the dominant terms cluster.
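The hull step can be sketched as follows: count labels, keep the most frequent ones, and compute each label's convex hull with Andrew's monotone chain algorithm. The plot axes are not stated here, so this sketch simply assumes each response is an `(x, y, label)` point (for example, the two components' proportions). `popular_label_hulls`, `top_n`, and the demo labels are hypothetical.

```python
from collections import Counter

def convex_hull(points):
    """Andrew's monotone chain: hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        # z-component of (a - o) x (b - o); > 0 means a left turn
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]  # drop duplicated endpoints

def popular_label_hulls(points, top_n=3):
    """points: list of (x, y, label). Returns {label: hull} for the top_n labels."""
    counts = Counter(label for _, _, label in points)
    return {
        label: convex_hull([(x, y) for x, y, l in points if l == label])
        for label, _ in counts.most_common(top_n)
    }

demo = [(0, 0, "rage"), (1, 0, "rage"), (1, 1, "rage"), (0, 1, "rage"), (0.5, 0.5, "rage"),
        (5, 5, "gloom"), (6, 5, "gloom"), (5, 6, "gloom"), (9, 9, "meh")]
print(popular_label_hulls(demo, top_n=2))
# {'rage': [(0, 0), (1, 0), (1, 1), (0, 1)], 'gloom': [(5, 5), (6, 5), (5, 6)]}
```

A convex hull shows the extent of each label's region but ignores density, so a label with a few scattered outliers can produce a large, misleading hull.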


Insights

- When surprise was one component of a complex expression, participants were more likely to identify surprise than the other component. This trend may result in part from how the face is rendered: even in the neutral position, there is a slight gap between the iris and the upper eyelid, which may contribute to a look of surprise.

- When negative expressions (particularly fear, sadness, and disgust) were combined with joy, the only positive expression, participants were more likely to describe the result with a negative label.

- Participants were usually more likely to “correctly” identify an expression when it combined one dominant, high-proportion component with a minor, low-proportion one (e.g., 90% anger + 10% sadness). One notable exception is the combination of anger and disgust: participants identify both components correctly, but the data points show very little obvious clustering within the plot.

- While fear, anger, and disgust are all perceived more strongly than joy, fear is also perceived more strongly than anger or disgust when paired with either of them.
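Statements such as “fear is perceived more strongly than anger” need an operational definition. One possible measure, sketched here under stated assumptions, is the share of labels naming component A among all labels that name either component of an A + B stimulus; a share above 0.5 means A dominated. The `LABEL_TO_EMOTION` lookup, the `dominance` function, and the demo responses are hypothetical, and this is not necessarily how the insights above were derived.

```python
from collections import defaultdict

# Hypothetical lookup from free-text labels to fundamental emotions; a real
# analysis would need a much larger, hand-checked mapping.
LABEL_TO_EMOTION = {
    "happy": "joy", "joy": "joy",
    "scared": "fear", "afraid": "fear", "fear": "fear",
    "angry": "anger", "mad": "anger", "anger": "anger",
    "disgusted": "disgust", "gross": "disgust", "disgust": "disgust",
    "sad": "sadness", "surprised": "surprise", "shocked": "surprise",
}

def dominance(responses):
    """responses: iterable of (emotion_1, emotion_2, label) for two-emotion stimuli.

    Returns {(a, b): share of matching labels that named a}, with (a, b) sorted
    alphabetically. Labels that name neither component are ignored.
    """
    named = defaultdict(lambda: [0, 0])  # (a, b) -> [labels naming a, labels naming b]
    for e1, e2, label in responses:
        a, b = sorted((e1, e2))
        hit = LABEL_TO_EMOTION.get(label.strip().lower())
        if hit == a:
            named[(a, b)][0] += 1
        elif hit == b:
            named[(a, b)][1] += 1
    return {pair: n_a / (n_a + n_b) for pair, (n_a, n_b) in named.items()}

demo = [("joy", "fear", "scared"), ("fear", "joy", "afraid"),
        ("joy", "fear", "happy"), ("joy", "fear", "confused")]
print(dominance(demo))  # {('fear', 'joy'): 0.6666666666666666}
```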